Zoe Horn and Ned Rossiter

“Images exist insofar as their media-habitats, ecosystems, and social practices exist and function to provide the structure of cognitive patterns for them.”

Lydia H. Liu, The Freudian Robot: Digital Media and the Future of the Unconscious (Chicago and London: University of Chicago Press, 2010), 219.

The public debut of ‘generative’ AI tools in late 2022 spawned a flurry of excitement and also consternation among exuberant tech-bros, emoting politicians, conflicted creatives and the curious at large. Widespread anxieties about the impacts of this new wave of automation across society and economy failed to restrain an almost feverish interest. Tinkering and ‘prompt-engineering’ swiftly inducted recombinations of text and images into computational training routines. We critically probe the nexus between generative technologies such as ChatGPT (text-to-text) and Midjourney (text-to-image) and an emergent episteme figured around the movement of data.

The emergence of neural networks and deep learning techniques in the 21st century registers a step change, rather than departure point, in the genealogy of technological generativity. Nonetheless, we find in the gravitation of attention toward generative technologies a scale of extension into the realm of social and cultural production that warrants the claim that we are in the midst of an epistemic shift. Once any technological form is ubiquitous, conditions are established that instantiate new grammars of expression, new orderings of things, new technical ensembles that structure and organize the milieu of perception and cognition. Such a technological environment, we maintain, defines the conjunctural horizon of the future-present.

We question why the terms ‘generative’ and ‘generation’ are appended to the algorithmic routines of the class of computational operations widely referred to as large language models (LLMs). The term ‘prompt’ might be another. First, though, ‘generation’: in the midst of the technical-epistemic ensemble of LLMs, it is hard not to be prompted to pursue a genealogy of generation. Is there a continuum that traffics with this term, carrying us from the industrial epoch of carbon capitalism to a Darwinian episteme of evolutionary species-being? Can we suppose, like Bateson, that there are ‘patterns which connect’?[1] And, if so, is this a computational condition or messianic order?

The ‘generative’ turn in AI indexes a paradigm shift instituted by the massive scalar expansion in computational calculation, one that is contingent on the availability of vast quantities of training data but is now in the business of aggregating and enclosing the data commons. Generative AI is fuelling an extraordinary churn of novel ‘synthetic’ data streams, including but not limited to synthesized images, 3D models, texts, music, and short videos. Triggered by a short text prompt, DALL-E 2 generates realistic images in a software-specific aesthetic. Operating in a post-cybernetic idiom, the automated image combines distinct and unrelated objects in semantically calculable ways to assume the status of original.[2]

In a future draft of this paper, we will elaborate how this bears upon our argument that generative AI registers an emergent episteme. The political implications of this shift are not yet clear. What, for instance, is the status of the labour theory of value or, conversely, a machine theory of labour within such a paradigm? At a base level, there’s an analytical imperative to better understand how circuits of data are constitutive of a larger social-technical episteme taking root within generative technologies.

We propose, and will study further as we develop this text, that the history of generation as a term is coincident with machine procedures, from which we begin to discern an emergent episteme specific to generative technologies associated with LLMs. Within this technical episteme, we define movement and anti-aesthetics as a class of elements assigned to the training of AI models coextensive with the political economy of elastic computing able to scale client demands for transmission, storage and processing. We consider how this episteme manifests through our own experiments with text and image generation via ChatGPT and Midjourney. Again, in future iterations of this paper, we will explore this computational condition in terms of a paradox of time peculiar to what Peter Osborne calls the ‘disjunctive conjunction’ of the contemporary.[3]

Analytical Currency of the Episteme

What is the significance of an episteme? Why might our critical attention be drawn to a constellation of technical rules, standards and procedures that define and organize grammars of expression? We note a tendency in recent years across media studies, STS and anthropology to make an assortment of statements and claims regarding current and emergent epistemic conditions, often aligned with a particular manifestation or practice of digital culture or society. But are the terms ‘epistemic’ and ‘episteme’ doing anything more than standing in for what might otherwise be understood as ‘knowledge production’? In other words, what is the status and social-cultural implication of the epistemic as distinct from knowledge production? And what are we declaring, in this paper, in our claim of a new epistemic horizon attributed to the advent of generative technologies?

In thinking the relation between technology and epistemology, German media theorist Friedrich Kittler’s maxim remains provocative: ‘Media determine our situation’.[4] Media form the infrastructural condition, the quasi-transcendental architecture or media a priori, for experience and understanding.

Within the analytical-political stakes pursued across the writings of Bernard Stiegler, the technology-epistemic couplet plays out in the first instance on the surface of cognition. For Stiegler, like Kittler, different technological forms organize cognitive processes in increasingly abstract ways across the iterative passage of historical time. Lydia Liu offers a perhaps unintentional synthesis of Kittler and Stiegler in her book The Freudian Robot where she writes: ‘Images exist insofar as their media-habitats, ecosystems, and social practices exist and function to provide the structure of cognitive patterns for them’.[5]

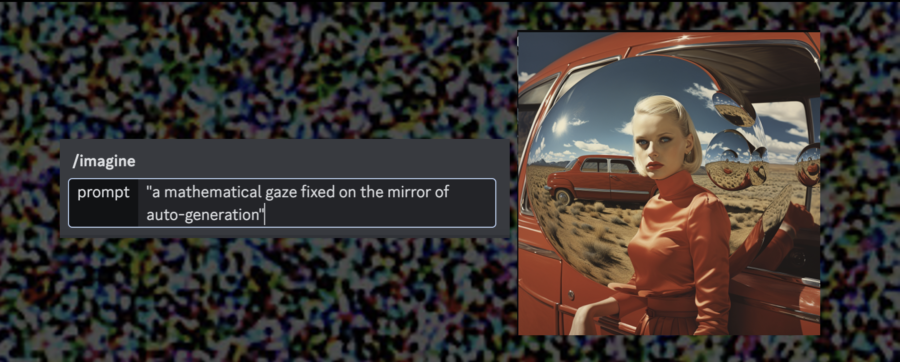

Our interest in this paper is to partition our attention, focussing on LLMs and their technical logics that calculate the generation of text and images. The suite of technologies within the family of LLMs is largely defined by neural network architectures that ‘learn’ embedded functions between data points and perform operations that synthesize data. This operative logic has strengthened AI’s functionalities around pattern detection and identification, but also around the generation of new data resembling the data provided during training. It is precisely in this capacity to generate new data from within the continuously defined data field of the machine operations of LLMs that we find a basis to claim a post-cybernetic event horizon. In his critique of the pervasiveness of mechanistic analogies of the mind, Matteo Pasquinelli writes, ‘In reality, cybernetics was not a science but a school of engineering in drag’.[6] By extension, we can understand the post-cybernetic idiom of large language models and machine images as next level, beyond engineering in drag and instead immersed in a full-frontal transition of self-organization with a mathematical gaze fixed on the mirror of auto-generation.[7]

What do Machines Learn?

Machine learning describes a kind of ‘generational’ confrontation within AI itself – a step in a line of technological and algorithmic descent characterized by still-emerging computational ‘learning’ systems related to perceiving, inferring, and synthesizing. Machine learning systems vary vastly in complexity and specialization but can be difficult to differentiate within the general AI discourse. Machine learning and deep learning, for instance, are rarely distinguished from one another, though the latter is a subset of the former, intended to handle larger and more complex datasets using multiple (‘deep’) layers of artificial neural networks. The computational architecture of deep learning is inspired by the structure and functioning of the human brain’s cognitive apparatus. In deep learning, procedural layers are organized as interconnected nodes, or networks of artificial neurons, that process and transmit data.
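To make the image of ‘layers of interconnected nodes’ more concrete, the following is a minimal sketch of data passing through a small stack of artificial neurons. It assumes a sigmoid activation and uses hand-picked, hypothetical weights rather than trained ones; the function names `layer` and `forward` are illustrative only and not drawn from any particular library.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def layer(inputs: Vector, weights: Matrix, biases: Vector) -> Vector:
    """One layer of artificial neurons: each neuron takes a weighted sum of
    every input, adds a bias, and squashes the result with a sigmoid."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        total = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(1.0 / (1.0 + math.exp(-total)))
    return outputs


def forward(inputs: Vector, network: List[tuple]) -> Vector:
    """Pass data through a stack of layers; the 'deep' in deep learning
    refers to the number of such stacked layers."""
    activations = inputs
    for weights, biases in network:
        activations = layer(activations, weights, biases)
    return activations


# Hypothetical, hand-picked weights: 2 inputs -> 3 neurons -> 3 neurons -> 1 output.
network = [
    ([[0.5, -0.2], [0.3, 0.8], [-0.7, 0.1]], [0.0, 0.1, -0.1]),
    ([[0.2, -0.4, 0.6], [0.9, 0.1, -0.3], [-0.5, 0.7, 0.2]], [0.05, 0.0, -0.05]),
    ([[1.0, -1.0, 0.5]], [0.0]),
]

print(forward([1.0, 0.5], network))
```

In an actual deep learning system the weights are not chosen by hand but adjusted during training, typically across millions or billions of such parameters.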

The large language models (LLMs) that have engulfed the AI discourse and imagination of the past 6–12 months are very large deep learning models whose learning processes are first tested and developed through pre-training on vast amounts of data. In both machine learning and deep learning, the training process can be supervised, unsupervised, or based on reinforcement. In the first case, supervised learning, the algorithm is trained on a labeled dataset to make predictions about, or classify, new data. In unsupervised learning, the algorithm receives unlabeled input data and gradually learns to identify correlations, similarities, and differences among the data until it derives recurring patterns and structures. In reinforcement learning, the process is typically focused on a series of decisions and relies on a reward signal issued when the desired decision is taken.
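The three training regimes can be contrasted with deliberately simple toy methods. The sketch below is an illustration under stated assumptions, not how LLMs are trained: a nearest-centroid classifier stands in for supervised learning, k-means clustering for unsupervised learning, and a reward-driven value update (a minimal bandit-style loop) for reinforcement learning. All function names and data are hypothetical.

```python
from statistics import mean
from typing import Dict, List, Tuple

Point = Tuple[float, float]


def dist2(a: Point, b: Point) -> float:
    return (a[0] - b[0]) ** 2 + (a[1] - b[1]) ** 2


def centroid(points: List[Point]) -> Point:
    return (mean(p[0] for p in points), mean(p[1] for p in points))


# Supervised: learn from labeled examples, then classify new data.
def train_nearest_centroid(labeled: List[Tuple[Point, str]]) -> Dict[str, Point]:
    groups: Dict[str, List[Point]] = {}
    for point, label in labeled:
        groups.setdefault(label, []).append(point)
    return {label: centroid(pts) for label, pts in groups.items()}


def classify(model: Dict[str, Point], point: Point) -> str:
    return min(model, key=lambda label: dist2(model[label], point))


# Unsupervised: no labels; k-means derives recurring groupings on its own.
def k_means(points: List[Point], k: int, steps: int = 10) -> List[Point]:
    centres = points[:k]  # naive initialisation: the first k points
    for _ in range(steps):
        clusters: List[List[Point]] = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: dist2(centres[i], p))
            clusters[nearest].append(p)
        centres = [centroid(c) if c else centres[i] for i, c in enumerate(clusters)]
    return centres


# Reinforcement: choose an action, receive a reward, update its estimated value.
def learn_by_reward(rewards: Dict[str, float], rounds: int = 20,
                    rate: float = 0.5) -> Dict[str, float]:
    values = {action: 0.0 for action in rewards}
    actions = list(values)
    for t in range(rounds):
        # try every action once, then greedily repeat the best-valued one
        action = actions[t] if t < len(actions) else max(values, key=values.get)
        values[action] += rate * (rewards[action] - values[action])
    return values


labeled = [((0.0, 0.0), "cat"), ((0.2, 0.1), "cat"),
           ((5.0, 5.0), "dog"), ((5.2, 4.9), "dog")]
print(classify(train_nearest_centroid(labeled), (4.8, 5.1)))  # → dog
print(k_means([(0.0, 0.0), (5.0, 5.0), (0.2, 0.1), (5.2, 4.9)], k=2))
print(learn_by_reward({"left": 0.0, "right": 1.0}))
```

The contrast lies less in the arithmetic than in what the algorithm is given: labels in the first case, raw data alone in the second, and only a reward signal in the third.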

Within the process of LLM image and text generation, latent space, also known as embedding space, refers to a lower-dimensional, abstract representation of data that captures the underlying structure and variations in the original high-dimensional data space. This dimensionality reduction simplifies the modeling process and makes it more tractable, especially when dealing with complex and high-dimensional data. Latent space can be understood as a compressed, more organized spatial representation of the world (to a computer), where different data points with similar characteristics are located more closely to one another. A generative model learns to map data points from the latent space back to the original data space, generating new data instances that resemble those in the training dataset. The process of mapping data points from the latent space to the original data space is usually called generation, though it is also referred to as decoding.
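The movement between data space and latent space can be illustrated with a deliberately reduced example. The sketch below swaps in principal component analysis (computed by power iteration) as a linear stand-in for the learned, nonlinear encoders and decoders of actual generative models: three-dimensional toy points are compressed to a single latent coordinate, and a latent value never seen in training is decoded back into a new data instance resembling the training set. The data and function names are hypothetical.

```python
import math
from typing import List

Vec = List[float]


def mean_vec(data: List[Vec]) -> Vec:
    """Average of each coordinate across the dataset."""
    return [sum(col) / len(data) for col in zip(*data)]


def principal_axis(data: List[Vec], iters: int = 200) -> Vec:
    """Power iteration on the covariance matrix: returns the unit direction
    along which the data varies most, used here as a 1-D latent space."""
    mu = mean_vec(data)
    centred = [[x - m for x, m in zip(row, mu)] for row in data]
    dim = len(mu)
    cov = [[sum(r[i] * r[j] for r in centred) / len(data) for j in range(dim)]
           for i in range(dim)]
    v = [1.0] * dim  # arbitrary non-zero starting vector
    for _ in range(iters):
        w = [sum(cov[i][j] * v[j] for j in range(dim)) for i in range(dim)]
        norm = math.sqrt(sum(c * c for c in w))
        v = [c / norm for c in w]
    return v


def encode(x: Vec, mu: Vec, axis: Vec) -> float:
    """Compress a 3-D data point into a single latent coordinate."""
    return sum((xi - mi) * ai for xi, mi, ai in zip(x, mu, axis))


def decode(z: float, mu: Vec, axis: Vec) -> Vec:
    """Map a latent coordinate back into the original data space ('generation')."""
    return [mi + z * ai for mi, ai in zip(mu, axis)]


training = [[1.0, 2.0, 3.0], [2.0, 4.0, 6.0], [3.0, 6.0, 9.0], [4.0, 8.1, 12.0]]
mu = mean_vec(training)
axis = principal_axis(training)
latents = [encode(x, mu, axis) for x in training]
# A latent point never seen in training, halfway between two encoded examples.
new_point = decode((latents[1] + latents[2]) / 2, mu, axis)
print([round(c, 2) for c in new_point])
```

Points with similar characteristics land near one another on the latent axis, and decoding an in-between latent value yields a plausible point that was never part of the training data.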

Text-to-image generation uses natural language processing (NLP), large language models (LLMs), and diffusion processing to produce digital images.[8] At the time of writing, more recent generative AIs rely on a ‘stable’ diffusion process, whereby a first phase, carried out by a transformer model, consists of image encoding through a neural network pre-trained on a large-scale dataset containing billions of image-text pairs.[9] The training produces the embeddings within the latent space that are combined to form a joint representation of image and text, capturing the semantic ‘meaning’ of both. Such joint representations are central to tasks such as image and text classification and object detection. In stable diffusion models, further stages convert a text prompt into text and image embeddings, which the diffusion neural network transforms into images.
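The diffusion stage can be sketched in miniature. The example below makes several loud assumptions: ‘images’ are vectors of three numbers; a hypothetical lookup table (`PROMPT_MEANS`) stands in for the text encoder that turns a prompt into an embedding; the trained denoising network is replaced by a closed-form estimate that is exact only for this toy Gaussian ‘training set’; the noise schedule values are arbitrary; and, unlike Stable Diffusion, it operates directly in data space rather than in a latent space. It shows the two movements of diffusion: a forward process that dissolves data into noise, and a reverse process (a deterministic, DDIM-style update) that steps from pure noise back toward data conditioned on the prompt.

```python
import math
import random
from typing import Dict, List

Vec = List[float]

# Noise schedule (assumed values): ALPHA_BAR[t] is the running product of
# (1 - beta) up to step t; the clean signal is scaled by its square root.
STEPS = 50
BETAS = [0.001 + (0.2 - 0.001) * t / (STEPS - 1) for t in range(STEPS)]
ALPHA_BAR: List[float] = []
_running = 1.0
for _beta in BETAS:
    _running *= 1.0 - _beta
    ALPHA_BAR.append(_running)

# Hypothetical stand-in for a text encoder: each prompt maps to the mean of a
# toy Gaussian "training set" of 3-number "images".
PROMPT_MEANS: Dict[str, Vec] = {
    "a red square": [1.0, 0.0, 0.0],
    "a blue circle": [0.0, 0.0, 1.0],
}
DATA_VAR = 0.01  # variance of the toy training data around each mean


def add_noise(x0: Vec, t: int, rng: random.Random) -> Vec:
    """Forward process: blend clean data with Gaussian noise at step t."""
    a = ALPHA_BAR[t]
    return [math.sqrt(a) * x + math.sqrt(1.0 - a) * rng.gauss(0.0, 1.0) for x in x0]


def denoise(x: Vec, t: int, prompt: str) -> Vec:
    """Estimate the clean data from noisy x, conditioned on the prompt.
    This posterior mean is exact for the toy Gaussian data; a real system
    uses a trained neural network here instead."""
    mu, a = PROMPT_MEANS[prompt], ALPHA_BAR[t]
    gain = math.sqrt(a) * DATA_VAR / (a * DATA_VAR + 1.0 - a)
    return [m + gain * (xi - math.sqrt(a) * m) for xi, m in zip(x, mu)]


def generate(prompt: str, rng: random.Random) -> Vec:
    """Reverse process (deterministic, DDIM-style): start from pure noise and
    step back toward the data, re-estimating the clean signal at each step."""
    x = [rng.gauss(0.0, 1.0) for _ in PROMPT_MEANS[prompt]]
    for t in reversed(range(STEPS)):
        x0_hat = denoise(x, t, prompt)
        a_t = ALPHA_BAR[t]
        a_prev = ALPHA_BAR[t - 1] if t > 0 else 1.0
        # noise implied by the current clean-data estimate
        eps_hat = [(xi - math.sqrt(a_t) * x0i) / math.sqrt(1.0 - a_t)
                   for xi, x0i in zip(x, x0_hat)]
        x = [math.sqrt(a_prev) * x0i + math.sqrt(1.0 - a_prev) * ei
             for x0i, ei in zip(x0_hat, eps_hat)]
    return x


rng = random.Random(0)
print([round(v, 2) for v in generate("a red square", rng)])
```

Each run from fresh noise yields a slightly different output near the prompt's toy mean: a new instance that resembles, without reproducing, the ‘training data’.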

Models of Memory, Engineering Experiments

Learning and memory are closely related concepts. Given the constraints of time, we will assume in this meeting that there is no need to revisit the long disciplinary histories and preoccupations, from the cognitive to the computer sciences, that trouble the possibility of speaking of human and machine memory in the same breath. If learning, at its core, is the acquisition of knowledge, memory might be understood as the expression of what has been acquired. LLMs, in this case, and more precisely their generation, present an interesting model – and modeling – of memory to the extent that they can be understood to express a form of knowledge production and retention. Such a model is not fixed in time at the moment of pre-training; it is a mode of learning that we might extend to include the ongoing forms of re-training, fine-tuning, stuffing, conversational memory and other techniques and experiments of refinement that accumulate, process and analyze data. A core objective here is to adapt foundation models and hone more task-specific, descendant generative AI models and tools. In this regard, the model proliferates a family or class of variants within the parametric contours of the prompt.

How can we make sense of DALL-E or Stable Diffusion image outputs as memory, or as the expression of a machine’s learning predicated on retention? For one thing, even though these generator machines can differ in how they capture and reproduce data distributions, how they organize latent space, and which training procedures they use to learn the mapping, we also know they operate on the same principle: make outputs that look like the training data. Insofar as their expressions can be recognised as distinct from any individual image in the training set, as novel, unique or original, we can recognise such outputs as the work of a schema of vectors, incalculable (to the human), internal to the operative logic of the machine.

So while we perhaps cannot decode the machine’s knowledge from any given image output, in the way that a memory does not easily reveal how it came to be acquired, we can know, for lack of a better word, the experience of the machine. We generate its learning environment. And even though, in the strictest sense of its computational operations, a model can stop ‘learning’ once it is trained to the satisfaction of its human handlers, in practice we know these machines have been unleashed into the wild precisely because they are understood to be partial. Models like DALL-E 2 or GPT-4 are trained on vast, broad data using self-supervision at scale. They are understood as ‘foundation models’, a concept or at least terminology coined by Rishi Bommasani and his massive team of co-authors to underscore their ‘central yet incomplete character’.

For computer scientists, this ‘incompleteness’ can be understood as central to the model’s emergent capabilities and properties when brought to bear on a seemingly endless set of future tasks, at scale.[10] They seek to be ‘upgraded’ to ‘expert’ or niche models to demonstrate a ‘sharper’ knowledge of specific topics. They require users to manipulate and experiment with their ‘sensorial’ environment via new and imaginative techniques of engineering, in order to adapt them for a wide range of downstream tasks.

From Kinaesthetics to Anti-Aesthetics, or, the Calculation of Cognition

The proliferation of automated texts and images generated by large language models (LLMs) is accompanied by a corresponding depletion of sensation, registering the centrality of an anti-aesthetics specific to this machinic episteme. To the extent we may conceive this relation as a computational variation of kinaesthetics, the experience of sensation is sublimated into a form of neural hyperstimulation galvanized by a kind of libidinal drive not so different from that of the addict, frantic in their search for the next hit. As much as we might indulge in poetic speculations figured around the technics of sensation, our focus instead is on how an anti-aesthetics pervades the technically-driven machine operations and infrastructural facilities that utilize neural networks to detect patterns and structures within existing data. New grammars of expression are organized computationally in the form of nascent typologies.

The term ‘anti-aesthetics’ recalls, as many of you will remember, one of the anchor points that populated the delightful delirium of the eighties and early nineties when postmodernism trafficked across the horizon of cultural production. Here, we are thinking of Hal Foster’s edited collection, The Anti-Aesthetic: Essays on Postmodern Culture, published by Bay Press in 1983.[11] As it turned out, the title had more to say about the anti-aesthetic than the book’s contents. But dancing with the surface of meaning never troubled postmodern purists. So what bearing might the anti-aesthetic have on the pattern recognition attributed to deep learning?

In his preface, Hal Foster does actually make a few framing remarks on the anti-aesthetic. He writes:

… anti-aesthetic is the sign not of modern nihilism – which so often transgressed the law only to confirm it – but rather a critique which destructures the order of representations in order to reinscribe them.

‘Anti-aesthetic’ also signals that the very notion of the aesthetic, its network of ideas, is in question here: the idea that aesthetic experience exists apart, without ‘purpose’, all but beyond history, or that art can now effect a world at once (inter)subjective, concrete and universal – a symbolic totality. Like ‘postmodernism’, then, ‘anti-aesthetic’ marks a cultural position on the present: are categories afforded by the aesthetic still valid? (xv)

A few of the key categories we might associate with the aesthetic would include terms such as beauty, sublime, autonomy, transcendent, transgressive, even ‘resistance’ – a key ‘strategy of interference’ Foster invokes in setting out the case for postmodern culture. None of these terms would seem to apply when transposed to machine generated images, though arguably there is a certain beauty to Midjourney filters that cast a pharmacological glaze over the screen of the world. Other, more interesting, aesthetic categories such as sense and sensation are more pregnant with possibility when thinking the logic of machine images. But only insofar as this is a negative operation of depletion, of numb saturation that crushes synaptic circuits in the brain as neural networks calculate correspondence within iterative procedures of accumulation spat out as singular renditions of the computational aesthetic designed to add incremental variation to baseline data sets.

Translating Kinaesthesis

If kinaesthetics is a study of body motion and self-perception in relation to one’s own body, maybe the computational processes and outputs of large language models most resemble a kind of kinaesthesis, or muscle-memory, rather than a form of cognition? Data are synthetically fashioned from computational procedures of recursivity and recombination to generate iterative outputs. This is what we understand as a post-cybernetic operation, where externalities of noise and feedback fade away within a system that is internally generative. There is no cognitive determination going on here, as Katherine Hayles, Bernard Stiegler and others have examined at length.[12]

Machine deep learning is not analogous to cognitive processes of perception and deduction. To presume so is a problem of ‘epistemic translation’, as Matteo Pasquinelli points out in his recent book, The Eye of the Master: A Social History of Artificial Intelligence.[13] We might settle with the idea of a new class of nonconscious cognition (Hayles), but such a hypothesis doesn’t sufficiently probe how the proliferation of machine images contours the landscape of human perception and services the world’s repository of images that comprise the endless repertoire of aesthetic expression – even if the balance of scales tips toward the blunt coldness of the computational anti-aesthetic.

Paper presented at Exo-Mnemonics: Memory, Media, Machines, Symposium, Western Sydney University, 6–7 November 2023.

[1] Gregory Bateson, Mind and Nature: A Necessary Unity (Cresskill, New Jersey: Hampton Press, 1979), 7–8.

[2] The automated image can also perform image manipulation and interpolation with existing images.

[3] Peter Osborne, ‘Working the Contemporary: History as a Project of Crisis Today’. In Crisis as Form (London and New York: Verso, 2022), 3–17.

[4] Friedrich A. Kittler, Gramophone, Film, Typewriter, trans. Geoffrey Winthrop-Young and Michael Wutz (Stanford: Stanford University Press, 1999), xxxix.

[5] Lydia H. Liu, The Freudian Robot: Digital Media and the Future of the Unconscious (Chicago and London: University of Chicago Press, 2010), 219.

[6] Matteo Pasquinelli, The Eye of the Master: A Social History of Artificial Intelligence (London: Verso, 2023), 152.

[7] See also Jean Baudrillard, The Mirror of Production, trans. Mark Poster (St. Louis: Telos Press, 1975).

[8] Generative adversarial networks (GANs) are, generally speaking, a predecessor of diffusion models.

[9] The first release of DALL-E, for example, was optimized for image production using a trimmed-down version of the GPT-3 LLM, employing 12 billion parameters (instead of GPT-3’s full 175 billion) trained on a dataset of 250 million image-text pairs.

[10] Rishi Bommasani et al., ‘On the Opportunities and Risks of Foundation Models’, Center for Research on Foundation Models (CRFM), Stanford Institute for Human-Centered Artificial Intelligence (HAI), Stanford University, 2022, https://arxiv.org/pdf/2108.07258.pdf

[11] Hal Foster, The Anti-Aesthetic: Essays on Postmodern Culture (Seattle: Bay Press, 1983).

[12] N. Katherine Hayles, Unthought: The Power of the Cognitive Nonconscious (Chicago and London: University of Chicago Press, 2017). Stiegler considers this issue as a social crisis on a mass, trans-generational scale across many of his works. See, for instance, Bernard Stiegler, Taking Care of Youth and the Generations, trans. Stephen Barker (Stanford: Stanford University Press, 2010) and Bernard Stiegler, The Age of Disruption: Technology and Madness in Computational Capitalism, trans. Daniel Ross (London: Polity, 2021).

[13] Pasquinelli, 153.